Bounty hunters for science

25 Aug 2024

Last year we learned that 20 papers from Hoau-Yan Wang, an influential Alzheimer’s researcher, were marred by doctored images and other scientific misconduct. Shockingly, his research led to the development of a drug that was tested on 2,000 patients. A colleague described the situation as “embarrassing beyond words”.

We are told that science is self-correcting. But what’s interesting about this case is that the scientist who uncovered this fraud was not driven by the usual academic incentives. He was being paid by Wall Street short sellers who were betting against the drug company!

This was not an isolated incident. The most notorious example of Alzheimer’s research misconduct – doctored images in Sylvain Lesné’s papers – was also discovered with the help of short sellers.

These cases show how a critical part of the scientific ecosystem – the exposure of faked research – can be undersupplied by ordinary science. Unmasking fraud is a difficult and awkward task, and few people want to do it. But financial incentives can help close those gaps.

Financial incentives could plug other gaps in science, including areas unrelated to research misconduct. In particular, science could benefit from more bounty programs. Here are three ways such programs could be put to use.

Bounties for fraud whistleblowers

People who witness scientific fraud often stay silent due to perceived pressure from their colleagues and institutions. Whistleblowing is an undersupplied part of the scientific ecosystem.

As I argued last week, we can correct these incentives by borrowing an idea from the SEC, whose bounty program pays whistleblowers 10-30% of the fines imposed by the government. The program has been a huge success, catching dozens of fraudsters and reducing the stigma around whistleblowing. The Department of Justice has recently copied the model for other types of fraud, such as healthcare fraud. I hope they will extend the program to scientific fraud.

- Funder: The US Government

- Eligibility: Research employees with insider knowledge

- Cost: The program pays for itself, as bounties are a percentage of the funds reclaimed by the government.
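To make the arithmetic concrete, here is a minimal sketch of the 10-30% award range mentioned above. The function name and the $2 million figure are hypothetical, chosen only for illustration, and the sketch ignores the eligibility rules and thresholds a real program would have.

```python
def award_range(sanctions, low_pct=10, high_pct=30):
    """Return (lowest, highest) award for a given sanction amount.

    Illustrative only: the 10 and 30 percent bounds come from the text;
    everything else here is an assumption.
    """
    if sanctions < 0:
        raise ValueError("sanctions must be non-negative")
    return sanctions * low_pct / 100, sanctions * high_pct / 100


# Hypothetical case: the government collects $2,000,000 in fines.
fines = 2_000_000
low, high = award_range(fines)
print(f"Whistleblower award: ${low:,.0f} to ${high:,.0f}")
# Even at the top of the range, most of the money stays with the government.
print(f"Government keeps at least: ${fines - high:,.0f}")
```

Because the award is a share of money already recovered, the payout can never exceed what the program brings in, which is the sense in which it pays for itself.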

Bounties for spurious artifact detection in the social sciences

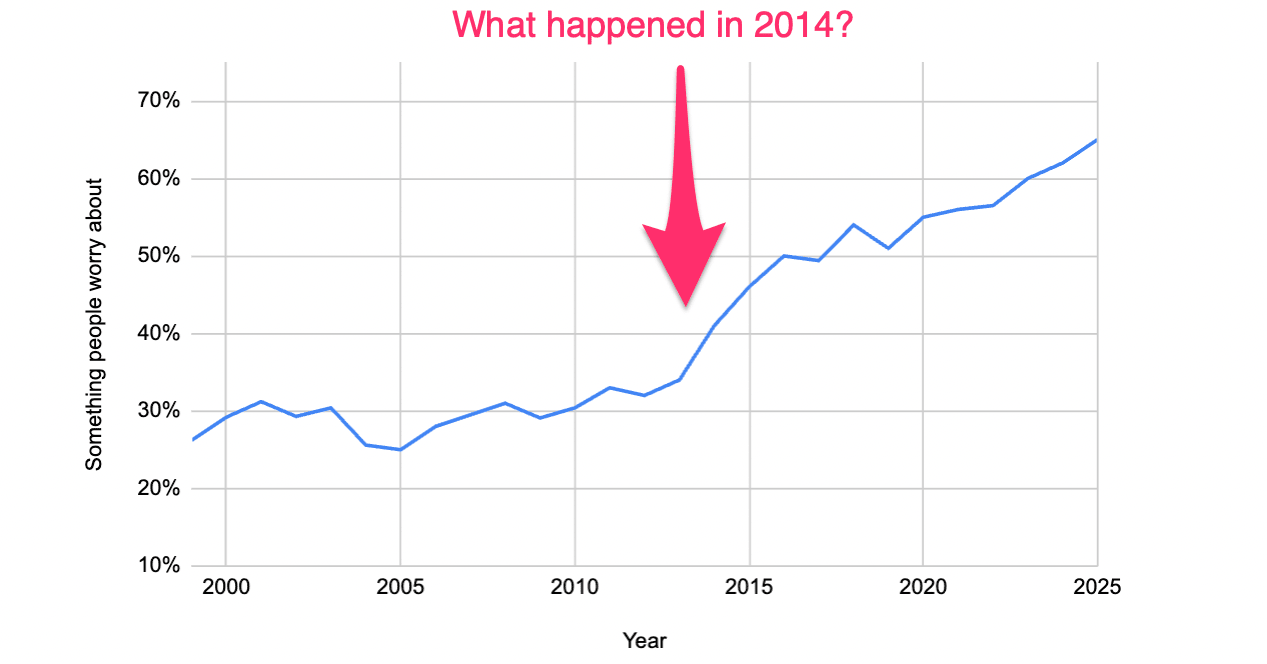

Sometimes I’ll see an interesting trend graph circulating around the internet, showing that some social problem has increased or decreased. People spend years debating the causes until eventually we learn it was due to a change in how the data was counted.

For instance, we recently learned that the increase in U.S. maternal mortality was caused by more states adding a pregnancy checkbox in death certificates. Similarly, part of the increase in teen suicide-related hospital visits was due to new coding rules for suicidal ideation and changes in screening recommendations. Both of these revelations emerged several years after the trends had been publicized and debated.

It’s fun to debate grand theories about major social changes, but it’s less fun to pore over obscure changes to record-keeping practices. We could fix these incentives by offering bounties to anyone who can identify these types of artifacts in trends of major interest. Bounties could target specific trends, with payouts based on how persuasive the case is or how much of the trend can be explained. Anyone would be eligible, including non-scientists who may better understand where the data comes from.

- Funder: Private philanthropy, perhaps Our World in Data

- Eligibility: Everyone is eligible, not just scientists

- Cost: $1000-$5000 per trend
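One way a sponsor might tie the payout to "how much of the trend can be explained" is sketched below. This is only an assumption about how such a rule could work: the linear payout, the function names, and all the numbers are hypothetical, apart from the $5,000 ceiling taken from the cost range above.

```python
def share_explained(raw_start, raw_end, adjusted_start, adjusted_end):
    """Fraction of the raw change that disappears once the artifact is corrected.

    raw_*      : the series as originally published
    adjusted_* : the same series after correcting for the counting change
    """
    raw_change = raw_end - raw_start
    if raw_change == 0:
        raise ValueError("no trend to explain")
    adjusted_change = adjusted_end - adjusted_start
    share = 1 - adjusted_change / raw_change
    # Clamp to [0, 1] so a payout is never negative or above the cap.
    return min(1.0, max(0.0, share))


def bounty_payout(share, max_bounty=5000):
    """Hypothetical rule: pay out in proportion to the share explained."""
    return round(max_bounty * share, 2)


# Made-up numbers: the published rate rose from 20 to 30, but after
# correcting for the record-keeping change it rose only from 20 to 22.
share = share_explained(20, 30, 20, 22)
print(f"Share of trend explained: {share:.0%}")
print(f"Payout: ${bounty_payout(share):,.2f}")
```

A real program would also need a judgment about how persuasive the correction is, which a formula like this cannot capture.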

Bounties to catch non-transparent deviations from preregistration

Researchers often deviate from pre-registered analysis plans. This is often legitimate, for example when exploratory analysis is clearly labeled, or when the pre-registration was unclear and the deviation is explained transparently. But it becomes misleading when deviations are not transparent.

The work of spotting these deviations is undersupplied, as most readers and reviewers do not have enough incentive to inspect research plans. A bounty program could plug this gap. Sponsors would place bounties on high-profile, influential papers, with rewards based on the severity of the deviation. Papers with significant deviations could be listed on a public website.

Not only would this program identify influential pre-registered papers that are not as reliable as we think, it would also incentivize future researchers to be more transparent and stick to their original plans where appropriate.

- Funder: Private philanthropy

- Eligibility: Everyone is eligible, not just professional scientists

- Cost: $200-$500 per paper found with a deviation

Some critics might say that science works best when it’s driven by people who are passionate about truth for truth’s sake, not for the money. But by this point it’s pretty clear that, like anyone else, scientists can be driven by incentives that are not always aligned with the truth. Where those incentives fall short, bounty programs can help. We’re already beginning to see some programs, like Error, start to emerge. I hope they succeed and that more models will be developed.

Special thanks to Tim Vickery and Alex Holcombe for discussions.